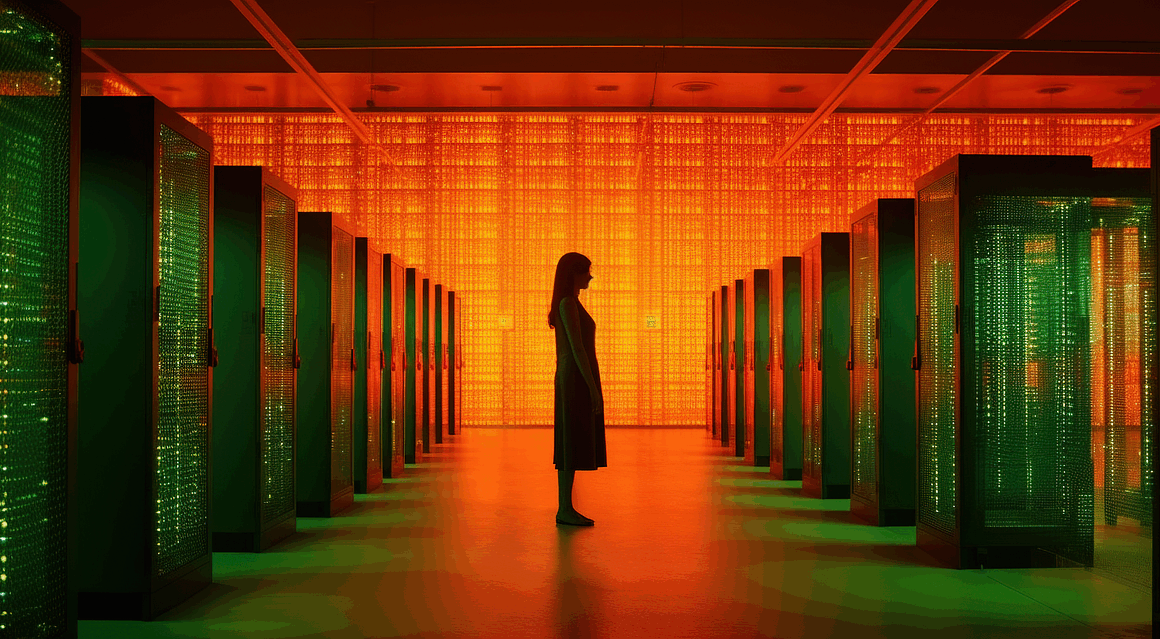

Real-Time Data Processing Architectures for Modern Businesses

In the digital era, the demand for real-time data processing architectures is escalating. Organizations are under immense pressure to harness data swiftly and effectively so that they can make informed decisions. Real-time processing offers multiple advantages, including enhanced operational efficiency and improved user experiences. Businesses combine big data technologies, cloud frameworks, and advanced analytics to develop systems that can process vast streams of data continuously. This architecture promotes agility in operations, allowing for rapid response to market changes. Companies leverage these systems for applications like fraud detection, customer analytics, and predictive maintenance. Additionally, real-time data processing can improve data quality, because incoming records can be cleaned, validated, and categorized as they arrive rather than hours or days later. It allows firms to track and analyze customer behavior, turning insights into actionable strategies that lead to competitive advantages. Furthermore, organizations implementing real-time processing architectures can stay ahead of the curve, responding to crises or opportunities as they emerge rather than after the fact. These elements of modern real-time data infrastructures are crucial for businesses that aim to thrive in today’s technology-driven economy, transforming raw data into valuable information that supports decision-making.

Central to effective real-time data processing is the use of appropriate technologies and frameworks. Companies often utilize stream processing architectures, which enable the continuous input, processing, and output of data streams. Popular tools such as Apache Kafka and Apache Flink support the movement of information in real time while providing robust capabilities for data transformation. These tools facilitate a fast-paced data flow model designed to accommodate large volumes of data with minimal latency. Moreover, cloud platforms such as AWS and Google Cloud offer solutions that can scale dynamically with demand, making them well suited to real-time applications. Businesses often integrate machine learning models into these architectures, further enhancing predictive analytics capabilities. Microservices architecture is another pivotal component, allowing specific services to operate and scale independently. Furthermore, containerization technologies like Docker support running applications consistently across diverse environments. Together, these technologies emphasize resilience and reliability, resulting in systems better prepared to handle unexpected spikes in data volume. With these tools at their disposal, organizations can capture trends and derive insights that solidify their competitive positioning.
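To make the idea of continuous input, processing, and output concrete, here is a minimal sketch of a stream pipeline using plain Python generators. It does not use Apache Kafka or Apache Flink; the function names, the event fields (`id`, `amount_cents`), and the threshold are assumptions chosen for illustration only.

```python
from typing import Dict, Iterable, Iterator, List


def read_events(source: Iterable[Dict]) -> Iterator[Dict]:
    """Input stage: hand events downstream one at a time as they arrive."""
    for event in source:
        yield event


def enrich(events: Iterator[Dict]) -> Iterator[Dict]:
    """Processing stage: transform each event without waiting for the rest."""
    for event in events:
        # Hypothetical transformation: convert cents to a dollar amount.
        yield {**event, "amount_usd": round(event["amount_cents"] / 100, 2)}


def only_large(events: Iterator[Dict], threshold_usd: float) -> Iterator[Dict]:
    """Filtering stage: keep only events at or above the threshold."""
    for event in events:
        if event["amount_usd"] >= threshold_usd:
            yield event


def run_pipeline(source: Iterable[Dict], threshold_usd: float = 100.0) -> List[Dict]:
    """Output stage: chain the stages; each event flows through end to end."""
    return list(only_large(enrich(read_events(source)), threshold_usd))


# Made-up sample data standing in for a live stream.
sample = [
    {"id": 1, "amount_cents": 2500},
    {"id": 2, "amount_cents": 15000},
    {"id": 3, "amount_cents": 99999},
]
results = run_pipeline(sample)
```

Because each stage is a generator, an event passes through every stage before the next one is read, which mirrors how a streaming system avoids accumulating a full batch first. A production system would replace the in-memory list with a durable event source.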

A significant aspect of real-time data processing is the architecture’s ability to ensure low latency in data analysis and delivery. Low latency refers to a minimal delay between the arrival of data and its processing, allowing businesses to respond almost immediately to changes in the data stream. Traditional systems often operate with batch processing, where data is collected over time and processed in intervals, resulting in delays that can hinder timely decision-making. Conversely, real-time systems utilize techniques such as event-driven architectures, where each data event triggers a response or action as it arrives. This immediate processing is critical for applications like real-time fraud detection, where seconds can determine whether a significant loss is incurred or prevented. Another component of low latency is data queuing, which helps manage the flow of input data efficiently while ensuring that important events are prioritized over others. Organizations must implement these data processing methods deliberately to strike a balance between speed and accuracy. Without a focus on low latency, companies risk missing opportunities or falling behind competitors who are leveraging swift data responses for strategic gain.
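The combination of event-driven handling and prioritized queuing described above can be sketched with the standard-library `heapq` module. This is an illustrative, single-process example under stated assumptions: the `EventBus` class, the event type names, and the convention that a lower number means higher priority are all hypothetical.

```python
import heapq
import itertools
from typing import Callable, Dict, List, Tuple


class EventBus:
    """Tiny in-process event bus with a priority queue (lower number = sooner)."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Callable[[dict], None]]] = {}
        self._queue: List[Tuple[int, int, str, dict]] = []
        # The counter breaks ties so equal-priority events keep arrival order.
        self._counter = itertools.count()

    def subscribe(self, event_type: str, handler: Callable[[dict], None]) -> None:
        """Register a handler that runs whenever this event type is processed."""
        self._handlers.setdefault(event_type, []).append(handler)

    def publish(self, event_type: str, payload: dict, priority: int = 10) -> None:
        """Queue an event; urgent events can pass a smaller priority value."""
        heapq.heappush(self._queue, (priority, next(self._counter), event_type, payload))

    def drain(self) -> None:
        """Process queued events, most urgent first, triggering their handlers."""
        while self._queue:
            _, _, event_type, payload = heapq.heappop(self._queue)
            for handler in self._handlers.get(event_type, []):
                handler(payload)


handled = []
bus = EventBus()
bus.subscribe("page_view", lambda p: handled.append(("page_view", p["user"])))
bus.subscribe("suspicious_payment", lambda p: handled.append(("suspicious_payment", p["user"])))

bus.publish("page_view", {"user": "a"})
bus.publish("suspicious_payment", {"user": "b"}, priority=0)  # jumps the queue
bus.publish("page_view", {"user": "c"})
bus.drain()
```

Here the suspicious payment is handled before the two page views even though it was published second. A real deployment would typically rely on a message broker for durability and distribution; the sketch only shows the ordering and dispatch logic.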

Security Challenges in Real-Time Data Processing

While real-time data processing brings numerous benefits, it also introduces security challenges that organizations need to address proactively. The continuous nature of data flow means that vulnerabilities can be exploited at any moment, making comprehensive security protocols essential. Companies must ensure robust encryption methods are deployed to protect sensitive information as it travels across networks. Furthermore, implementing stringent access controls helps safeguard data against unauthorized access. Frequent security audits and real-time monitoring solutions are crucial for identifying and mitigating risks before they are exploited. Moreover, compliance with regulations such as GDPR and HIPAA can complicate real-time data processing efforts, because organizations must ensure that personal data is managed appropriately while adhering to regional legal obligations. Continuous employee training in data privacy and security protocols is imperative, as human error remains one of the greatest risks in cybersecurity. Enterprises should also consider leveraging analytics tools that can automatically flag anomalous behavior within data processing systems, since the ability to respond quickly to threats can significantly strengthen the organization’s security posture.
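As one illustration of automatically flagging anomalous behavior, the sketch below applies a rolling z-score to a stream of numeric measurements. It is a simplified example, not a complete security control: the `AnomalyFlagger` class, the window size, the threshold of 3 standard deviations, and the sample request counts are all assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class AnomalyFlagger:
    """Flags a value that deviates sharply from a rolling window of recent values."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0, min_samples: int = 5) -> None:
        self.values = deque(maxlen=window)  # oldest values drop off automatically
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Return True if the value looks anomalous, then add it to the window."""
        flagged = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            # Skip the check when the window has no variation (sigma == 0).
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                flagged = True
        self.values.append(value)
        return flagged


# Made-up requests-per-minute figures with one obvious spike.
requests_per_minute = [100, 102, 98, 101, 99, 100, 103, 97, 500, 101]
flagger = AnomalyFlagger()
flagged_indices = [i for i, v in enumerate(requests_per_minute) if flagger.observe(v)]
```

Only the spike at index 8 is flagged in this sample. Note that the flagged value still enters the window, so it inflates the baseline for a while afterward; whether to exclude flagged values is a design choice that depends on the monitoring goal.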

The integration of real-time data processing with emerging technologies like the Internet of Things (IoT) and artificial intelligence (AI) significantly expands its capabilities. IoT devices continuously generate data, and when paired with real-time processing systems, businesses can derive insights from live data feeds. For example, manufacturers can monitor equipment health in real time, allowing them to anticipate failures before they occur and thus reduce downtime and maintenance costs. AI algorithms can process these streams of data effectively, identifying patterns of behavior that might not be immediately evident. The incorporation of machine learning into real-time models allows organizations to continually improve their data analysis capabilities. Furthermore, predictive analytics can lead to enhanced customer engagement strategies, as businesses can analyze purchasing behaviors live and make recommendations instantly. The combination of these technologies creates a dynamic environment where data is not only collected but acted upon, leading to smarter operations. As businesses continue to invest in these advanced systems, the integration of real-time data processing with IoT and AI will reshape how industries operate, paving the way for smarter decision-making processes.
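The equipment-monitoring scenario can be illustrated with an exponentially weighted moving average (EWMA), which smooths noisy sensor readings so that a sustained upward trend stands out from a single spike. This is a minimal sketch, not a predictive maintenance model: the function name, the smoothing factor `alpha`, the alert `limit`, and the vibration readings are assumptions.

```python
from typing import Iterable, List


def ewma_alerts(readings: Iterable[float], alpha: float = 0.3, limit: float = 7.0) -> List[int]:
    """Return the indices at which the smoothed reading exceeds the limit."""
    alerts: List[int] = []
    smoothed = None
    for index, reading in enumerate(readings):
        if smoothed is None:
            smoothed = reading  # seed the average with the first reading
        else:
            # New average = alpha * latest reading + (1 - alpha) * previous average.
            smoothed = alpha * reading + (1 - alpha) * smoothed
        if smoothed > limit:
            alerts.append(index)
    return alerts


# Hypothetical vibration readings: one isolated spike, then a steady climb.
vibration = [5.0, 5.1, 4.9, 9.0, 5.0, 6.5, 7.5, 8.5, 9.5, 10.5]
alerts = ewma_alerts(vibration)
```

With these settings the isolated spike at index 3 does not raise an alert, while the sustained climb does from index 7 onward. A smaller `alpha` reacts more slowly but ignores more noise; a larger one reacts faster at the cost of more false alarms.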

The Future of Real-Time Data Processing

As we navigate through the digital age, real-time data processing is set to evolve further, shaping the way organizations operate. Integration of artificial intelligence and advancements in machine learning are expected to enhance the capabilities of data systems, helping to reduce latency and improve data accuracy. The future holds innovations like edge computing, which processes data closer to the source rather than relying solely on central data centers, cutting round-trip delays and making real-time analytics practical at scale. Companies will prioritize agile infrastructures that can adapt to fluctuating needs, allowing them to remain competitive in fast-paced markets. Increased emphasis on customer experience will fuel the need for responsive data systems, enabling businesses to interact dynamically with their audience. Moreover, the rise of 5G technology is expected to bolster real-time data processing speed and reliability, opening up new possibilities for applications like autonomous systems and instant feedback loops. As these trends emerge, firms will need to prioritize investments in their data infrastructure to ensure they are equipped to meet the increasing demands of the future.
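One way to picture edge computing is a device that summarizes raw readings locally and forwards only compact summaries to a central system. The sketch below assumes a hypothetical `summarize_at_edge` function, a fixed window size, and made-up temperature readings; it omits networking entirely.

```python
from statistics import mean
from typing import Dict, Iterable, List


def summarize_at_edge(readings: Iterable[float], window_size: int = 5) -> List[Dict]:
    """Group raw readings into fixed-size windows and keep one summary per window."""
    summaries: List[Dict] = []
    window: List[float] = []

    def flush() -> None:
        # Only this small summary would be sent upstream, not the raw readings.
        summaries.append({
            "count": len(window),
            "min": min(window),
            "max": max(window),
            "mean": mean(window),
        })

    for value in readings:
        window.append(value)
        if len(window) == window_size:
            flush()
            window = []
    if window:  # summarize any leftover readings in a final partial window
        flush()
    return summaries


# Hypothetical temperature readings collected on a device.
temperatures = [20.0, 21.0, 19.0, 20.0, 20.0, 30.0, 31.0]
summaries = summarize_at_edge(temperatures)
```

Seven raw readings become two summaries here, which hints at the bandwidth savings when the same idea is applied to high-frequency sensor data. The trade-off is that detail inside each window is lost unless the device also retains the raw values.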

In summary, real-time data processing architectures are essential for modern businesses aiming to remain competitive and responsive in a fast-evolving economic landscape. The capability for near-instantaneous data analysis not only fosters informed decision-making but also drives customer engagement and operational excellence. Enterprises worldwide are realizing the value of deploying these systems across diverse industries, from finance to healthcare, adapting to unique challenges and leveraging opportunities as they arise. By investing in innovative technologies, organizations can enhance their agility and stay ahead of market demands. However, it is crucial to prioritize security and compliance across these architectures to protect against potential vulnerabilities. Ultimately, the journey towards sophisticated real-time processing is continuous, requiring a commitment to embrace evolving technologies and adapt to changing consumer preferences. Organizations that recognize the importance of real-time insights will position themselves to thrive in this data-centric era, using them to power their decision-making processes and strategic initiatives.